Computing bias refers to unintended prejudice or unfair outcomes in algorithms and software systems that result from flawed data, design choices, or existing societal biases. These biases can appear in areas such as hiring, facial recognition, and predictive policing, resulting in discriminatory or inaccurate decisions. Understanding and addressing computing bias is crucial to ensuring fairness and inclusivity in technology. Developers must be aware of the sources of bias and use strategies such as diverse data sets, bias audits, and transparency to reduce these impacts in computing systems.

Learning Objectives

For the topic of Computing Bias in AP Computer Science Principles, you should focus on understanding how biases arise in algorithms, especially from biased data and flawed design. Learn to identify different types of bias, such as data, sampling, and label bias, and explore real-world examples of bias in technology. Additionally, study methods for mitigating bias, including diverse data collection, algorithm testing, and transparency in design. Finally, grasp the ethical implications and societal impacts of bias in computing systems.

Computing Bias – AP Computer Science Principles

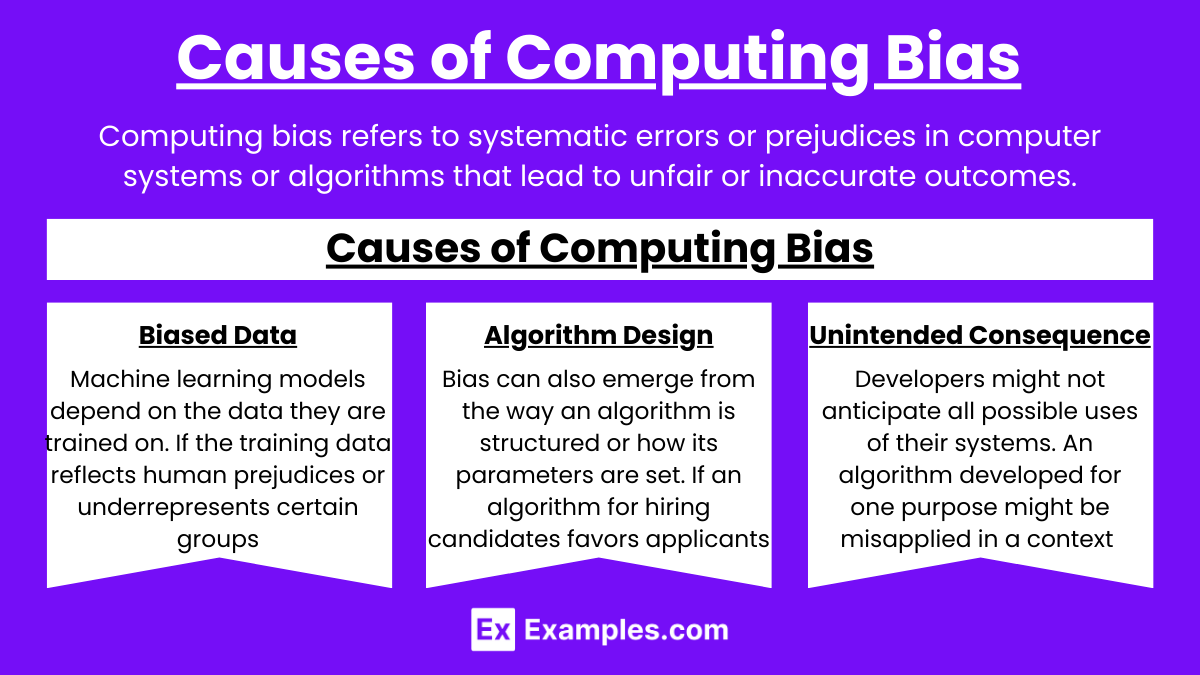

Computing bias refers to systematic errors or prejudices in computer systems or algorithms that lead to unfair or inaccurate outcomes. Bias can arise from the design, development, or deployment of software, affecting various decision-making processes, from hiring algorithms to facial recognition.

Causes of Computing Bias

- Biased Data: Machine learning models depend on the data they are trained on. If the training data reflects human prejudices or underrepresents certain groups, the algorithm may perpetuate these biases.

- Example: A facial recognition system trained predominantly on lighter-skinned faces may struggle to accurately recognize individuals with darker skin.

- Algorithm Design: Bias can also emerge from the way an algorithm is structured or how its parameters are set.

- Example: If an algorithm for hiring candidates favors applicants from specific educational backgrounds without considering other qualifications, it may lead to biased outcomes.

- Unintended Consequences: Developers might not anticipate all possible uses of their systems. An algorithm developed for one purpose might be misapplied in a context where it behaves unfairly.

- Example: A credit scoring system may inadvertently penalize people from lower-income areas if it factors in ZIP codes, because a ZIP code can act as a stand-in (proxy) for income or race.

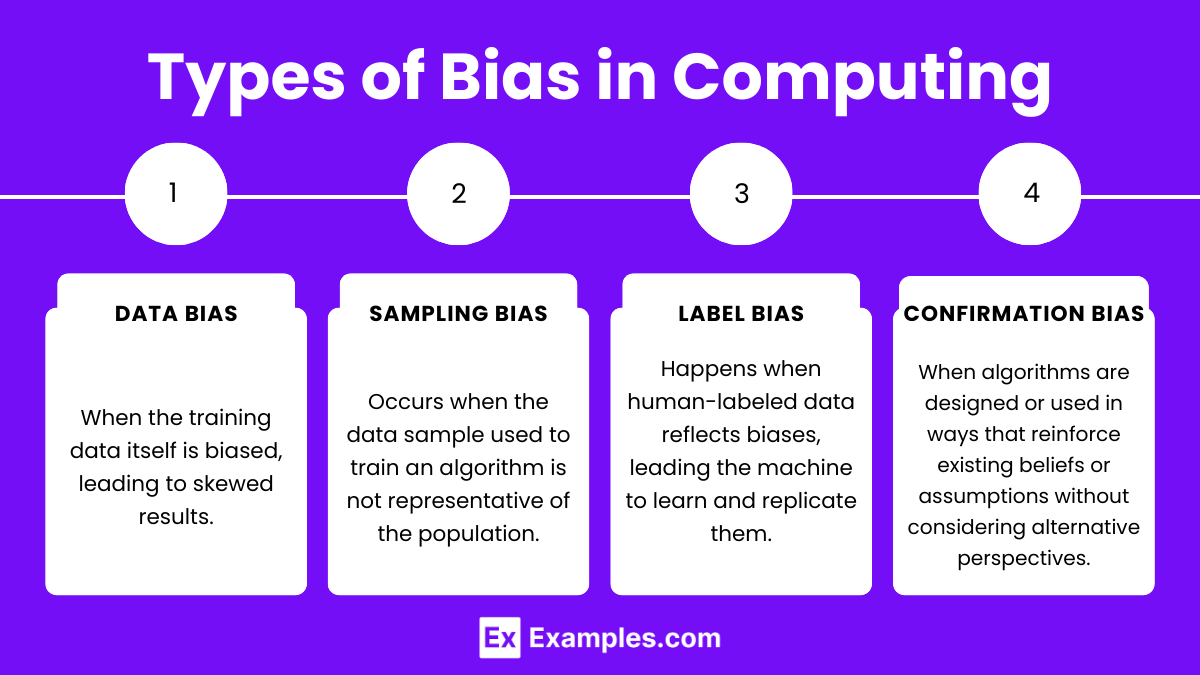

Types of Bias in Computing

- Data Bias: When the training data itself is biased, leading to skewed results. For example, an algorithm trained on historical hiring data may inherit the biases present in past hiring decisions.

- Sampling Bias: Occurs when the data sample used to train an algorithm is not representative of the population. A sample that excludes certain groups can result in skewed outcomes.

- Label Bias: Happens when human-labeled data reflects biases, leading the machine to learn and replicate them.

- Example: In an image classification task, if labels are applied inconsistently across certain demographic groups, the model may develop biased associations.

- Confirmation Bias: When algorithms are designed or used in ways that reinforce existing beliefs or assumptions without considering alternative perspectives.

- Example: A search engine that tailors results to confirm a user’s existing beliefs may deepen societal divides.
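One practical way to spot sampling bias is to compare how often each group appears in a training sample with how often it appears in the population the system will serve. The Python sketch below is a minimal illustration that assumes each record has already been reduced to a single group label. The function names and the data are hypothetical.

```python
from collections import Counter

def group_shares(records):
    """Return each group's share (0.0 to 1.0) of a list of group labels."""
    counts = Counter(records)
    total = len(records)
    return {group: count / total for group, count in counts.items()}

def representation_gaps(sample, population):
    """Sample share minus population share for every group in the population.

    Negative values mean the group is underrepresented in the sample;
    a group missing from the sample entirely gets a share of 0.0.
    """
    sample_shares = group_shares(sample)
    population_shares = group_shares(population)
    return {
        group: sample_shares.get(group, 0.0) - share
        for group, share in population_shares.items()
    }

# Hypothetical data: the population is split evenly between groups A and B,
# but the training sample was collected mostly from group A.
population = ["A"] * 500 + ["B"] * 500
sample = ["A"] * 90 + ["B"] * 10

gaps = representation_gaps(sample, population)
for group, gap in sorted(gaps.items()):
    print(f"{group}: {gap:+.2f}")
```

Here group B makes up half of the population but only a tenth of the sample, so a model trained on this sample sees far fewer examples of B.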

Mitigating Computing Bias

- Diverse Data: Ensuring that training data includes a wide range of examples representing different groups and situations is essential to minimizing bias.

- Bias Audits: Regularly testing algorithms for bias and reviewing their outcomes across different demographic groups can help identify and mitigate biases.

- Transparent Design: Providing transparency into how algorithms make decisions, including the data and criteria used, helps users and stakeholders assess the fairness of those decisions.

- Human Oversight: Algorithms should not replace human judgment entirely, especially in critical areas like law enforcement, healthcare, or hiring. Human review can help catch unintended biases.

- Inclusive Development Teams: Having diverse teams involved in the development process can reduce bias by incorporating different perspectives and experiences.
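A bias audit often starts by comparing outcomes across groups. The Python sketch below computes each group's selection rate and divides it by the highest group's rate. The 0.8 threshold mirrors the "four-fifths rule," a rule of thumb from US employment guidelines; it is only one possible check, not a complete audit. The function names and the audit data are hypothetical.

```python
def selection_rates(decisions):
    """Fraction of positive decisions per group.

    `decisions` is a list of (group, selected) pairs, where `selected` is a bool.
    """
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Each group's selection rate divided by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no group fares worse than another.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}

def flag_groups(decisions, threshold=0.8):
    """Groups whose impact ratio falls below `threshold`."""
    ratios = impact_ratios(selection_rates(decisions))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)

# Hypothetical audit log: group A selected 50 of 100, group B selected 30 of 100.
decisions = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)
print(selection_rates(decisions))  # {'A': 0.5, 'B': 0.3}
print(flag_groups(decisions))      # ['B']
```

Group B's ratio is 0.3 / 0.5 = 0.6, below 0.8, so the audit flags it for human review. A flag is a prompt to investigate, not proof of discrimination on its own.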

Examples

Example 1: Facial Recognition Bias

Facial recognition technologies have been found to exhibit racial and gender biases. Studies have shown that some of these systems are most accurate for white male faces and significantly less accurate for individuals with darker skin tones and for women. A major cause is the lack of diversity in the datasets used to train the algorithms, in which certain demographics are underrepresented. In law enforcement, such errors can lead to misidentifications and wrongful accusations, which makes the use of this technology controversial and raises concerns about racial profiling.
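Gaps like these are easy to miss if a system is judged by a single overall accuracy number. The Python sketch below computes accuracy separately for each group. The group labels and every count are made-up numbers for illustration, not results from any study.

```python
def accuracy_by_group(results):
    """Accuracy computed separately for each demographic group.

    `results` is a list of (group, predicted_id, true_id) tuples.
    """
    correct, total = {}, {}
    for group, predicted, actual in results:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}

# Hypothetical test results: (group, predicted identity, true identity).
results = (
    [("lighter-skinned", "p1", "p1")] * 98 + [("lighter-skinned", "p2", "p1")] * 2
    + [("darker-skinned", "p1", "p1")] * 80 + [("darker-skinned", "p2", "p1")] * 20
)

# One overall number hides the difference between groups.
overall = sum(predicted == actual for _, predicted, actual in results) / len(results)
print(f"overall: {overall:.2f}")
for group, accuracy in accuracy_by_group(results).items():
    print(f"{group}: {accuracy:.2f}")
```

The overall accuracy of 0.89 looks acceptable, while the per-group breakdown (0.98 versus 0.80) shows where the system fails.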

Example 2: Hiring Algorithm Bias

Some companies have adopted AI-powered algorithms to help screen job applicants. However, these systems have been found to perpetuate gender and racial biases present in historical hiring practices. For example, if an algorithm is trained on data from a company that historically hired more men than women, it may learn to favor male candidates, especially if it links certain terms or educational backgrounds that were more common among past male hires with success. This can result in discriminatory hiring decisions and reduce diversity in the workplace.
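To see how a screening tool can absorb bias from its training data, here is a deliberately tiny Python sketch. It assumes a hypothetical "model" that scores each resume keyword by the hire rate of past applicants who listed it. The keywords, the history, and the function names are all invented for illustration, and real screening systems are far more complex.

```python
from collections import defaultdict

def train_keyword_scores(history):
    """Learn each keyword's hire rate from past (keywords, hired) records."""
    seen = defaultdict(int)
    hired = defaultdict(int)
    for keywords, was_hired in history:
        for word in keywords:
            seen[word] += 1
            hired[word] += int(was_hired)
    return {word: hired[word] / seen[word] for word in seen}

def score(keywords, keyword_scores):
    """Average the learned scores of known keywords (0.0 if none are known)."""
    known = [keyword_scores[word] for word in keywords if word in keyword_scores]
    return sum(known) / len(known) if known else 0.0

# Hypothetical history: every applicant knows Python, but past hiring
# decisions favored applicants who listed a men's club.
history = (
    [({"python", "mens_club"}, True)] * 8
    + [({"python", "womens_club"}, True)] * 2
    + [({"python", "womens_club"}, False)] * 6
)
scores = train_keyword_scores(history)

# Two new applicants with identical skills receive different scores.
print(score({"python", "mens_club"}, scores))    # 0.8125
print(score({"python", "womens_club"}, scores))  # 0.4375
```

Nothing in the code mentions gender. The gap comes entirely from the historical hiring decisions, so retraining on the same history would reproduce it.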

Example 3: Predictive Policing Bias

Predictive policing algorithms are designed to identify areas or individuals that are more likely to experience or commit crimes. However, these systems have faced criticism for reinforcing racial biases present in historical crime data. For instance, if a neighborhood has been over-policed in the past, more incidents will have been recorded there simply because more officers were present to record them. The algorithm may then recommend even more policing in that area, even when its actual crime rate is no higher than elsewhere. This can create a feedback loop in which already marginalized communities face disproportionate scrutiny, exacerbating social inequalities.
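The feedback loop can be illustrated with a toy simulation in Python. It assumes a deliberately extreme, hypothetical rule: each day, patrol only the area with the most recorded incidents, and record incidents only where the patrol goes. The area names, rates, and starting counts are made up. Real systems are more complex, and the sketch only shows how such a loop can arise.

```python
def simulate_patrols(recorded, true_rate, days):
    """Simulate a 'hotspot' policy that patrols the area with the most records.

    Incidents are only recorded where the patrol goes, so the other areas
    never produce new data, whatever their true incident rate.
    """
    recorded = dict(recorded)  # copy so the caller's data is unchanged
    for _ in range(days):
        target = max(recorded, key=recorded.get)  # area with the most records
        recorded[target] += true_rate[target]     # only patrolled incidents are recorded
    return recorded

# Hypothetical: both areas have the same true incident rate,
# but "north" starts with slightly more historical records.
true_rate = {"north": 3, "south": 3}
start = {"north": 11, "south": 10}
print(simulate_patrols(start, true_rate, days=30))  # {'north': 101, 'south': 10}
```

A one-record head start becomes a tenfold gap. The data appears to confirm that "north" needs more policing, even though the two areas were identical by construction.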

Example 4: Credit Scoring Bias

Credit scoring algorithms are used to determine a person’s creditworthiness, but they can inadvertently discriminate against certain groups. These systems often use proxies like ZIP codes, which may correlate with socio-economic status or race, to evaluate a person’s likelihood of defaulting on a loan. As a result, individuals from historically underprivileged communities may receive lower credit scores, not because of their actual financial behavior but because of biased metrics in the algorithm.
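The proxy problem can be sketched in a few lines of Python. The scoring formula, the ZIP codes, the default rates, and the group labels below are all hypothetical and are not how any real credit bureau computes scores. The point is that the function never uses `group`, yet applicants with identical payment histories get different scores because ZIP code is correlated with group membership.

```python
# Hypothetical historical default rates by ZIP code (the proxy feature).
zip_default_rate = {"11111": 0.02, "22222": 0.10}

def credit_score(zip_code, on_time_rate):
    """Hypothetical score: reward payment history, penalize the ZIP code's past defaults.

    The applicant's group is never an input, but ZIP code stands in for it.
    """
    return round(600 + 200 * on_time_rate - 1000 * zip_default_rate[zip_code])

# Hypothetical applicants: (zip_code, group, on_time_payment_rate).
# In this made-up data, each group lives in a different ZIP code.
applicants = [
    ("11111", "group_x", 0.95),
    ("11111", "group_x", 0.90),
    ("22222", "group_y", 0.95),
    ("22222", "group_y", 0.90),
]

for zip_code, group, on_time in applicants:
    print(group, on_time, credit_score(zip_code, on_time))
```

An applicant in group_y with a 95% on-time payment rate scores 690, while an applicant in group_x with the same history scores 770. The entire difference comes from the ZIP code penalty.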

Example 5: Search Engine Bias

Search engines can exhibit bias by tailoring search results based on users’ previous behavior, which can reinforce existing beliefs and opinions, a phenomenon known as confirmation bias. For example, if a user frequently searches for content that aligns with a particular political ideology, the search engine’s algorithm may prioritize results that reinforce that perspective, while suppressing opposing views. This can contribute to the formation of echo chambers, where individuals are exposed only to information that aligns with their preexisting beliefs, reducing exposure to diverse perspectives.
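A simplified Python sketch shows how personalization can push a user toward familiar views. It assumes a hypothetical ranking in which each result has a base relevance score, and each past click on a topic adds a fixed boost. The titles, topics, scores, and boost value are invented for illustration and do not describe any real search engine.

```python
def personalize(results, click_history, boost=1):
    """Re-rank results, boosting topics the user clicked on before.

    `results` is a list of (title, topic, relevance) tuples.
    `click_history` maps topic -> number of past clicks.
    """
    def adjusted(result):
        title, topic, relevance = result
        return relevance + boost * click_history.get(topic, 0)
    return [title for title, _, _ in sorted(results, key=adjusted, reverse=True)]

# Hypothetical results with base relevance scores.
results = [
    ("Op-ed favoring view X", "view_x", 7),
    ("Op-ed favoring view Y", "view_y", 9),
    ("Neutral overview", "neutral", 8),
]

print(personalize(results, {}))               # ranked by relevance alone
print(personalize(results, {"view_x": 3}))    # past clicks move view X to the top
```

Each click on the top result adds to the history, which raises that topic further on the next search. Over time the least relevant result can stay on top simply because it matches what the user already clicked.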

Multiple Choice Questions

Question 1

Which of the following is the primary cause of computing bias in machine learning algorithms?

A) Inadequate hardware

B) Biased training data

C) Insufficient user feedback

D) Lack of algorithm testing

Answer: B) Biased training data

Explanation: The most common cause of computing bias in machine learning algorithms is biased training data. Machine learning models learn from the data they are trained on. If this data contains biases (such as overrepresentation of certain groups or skewed labels), the model will learn and replicate these biases in its decision-making. Inadequate hardware (A), insufficient user feedback (C), and lack of algorithm testing (D) can cause other issues, but they are not the primary cause of bias.

Question 2

Which type of computing bias occurs when an algorithm reinforces existing beliefs or assumptions by consistently showing similar results?

A) Sampling bias

B) Confirmation bias

C) Label bias

D) Data bias

Answer: B) Confirmation bias

Explanation: Confirmation bias happens when algorithms consistently reinforce existing beliefs or assumptions, often by presenting information or results that align with the user’s current preferences or views. For example, a search engine that tailors its results to confirm what a user already believes contributes to the reinforcement of those beliefs. Sampling bias (A) occurs when the data sample is not representative, label bias (C) arises from inconsistently labeled data, and data bias (D) occurs when biased data is used in the training process.

Question 3

Which of the following strategies is most effective in mitigating computing bias in algorithmic decision-making?

A) Using only historical data for training

B) Increasing the algorithm’s complexity

C) Conducting regular bias audits

D) Eliminating human oversight

Answer: C) Conducting regular bias audits

Explanation: Conducting regular bias audits is one of the most effective ways to identify and mitigate bias in algorithms. These audits involve evaluating an algorithm’s decisions across different demographic groups to ensure fairness. Relying only on historical data (A) can perpetuate existing biases, increasing the algorithm’s complexity (B) does not necessarily reduce bias, and eliminating human oversight (D) could lead to unchecked biases in important decision-making processes. Regular audits allow developers to address and fix biases that may emerge over time.