Parallel and Distributed Computing involves breaking down complex problems into smaller tasks that can be executed simultaneously. In parallel computing, multiple processors handle tasks at the same time, improving efficiency and speed. Distributed computing spreads tasks across multiple computers connected through a network, each working independently while communicating to achieve a common goal. These methods are essential for handling large-scale computations and data processing in fields like scientific research, cloud computing, and big data analytics, making them vital concepts in modern computing systems.

Learning Objectives

In studying Parallel and Distributed Computing for AP Computer Science Principles, you should learn how problems are broken into smaller tasks that can be processed simultaneously to increase computational efficiency. Understand key concepts like concurrency, parallelism, and the differences between parallel and distributed systems. Focus on grasping how speedup, scalability, and fault tolerance are achieved in distributed systems. Additionally, recognize the importance of load balancing and communication in distributed computing environments, and study practical applications such as MapReduce for large-scale data processing.

Introduction to Parallel and Distributed Computing

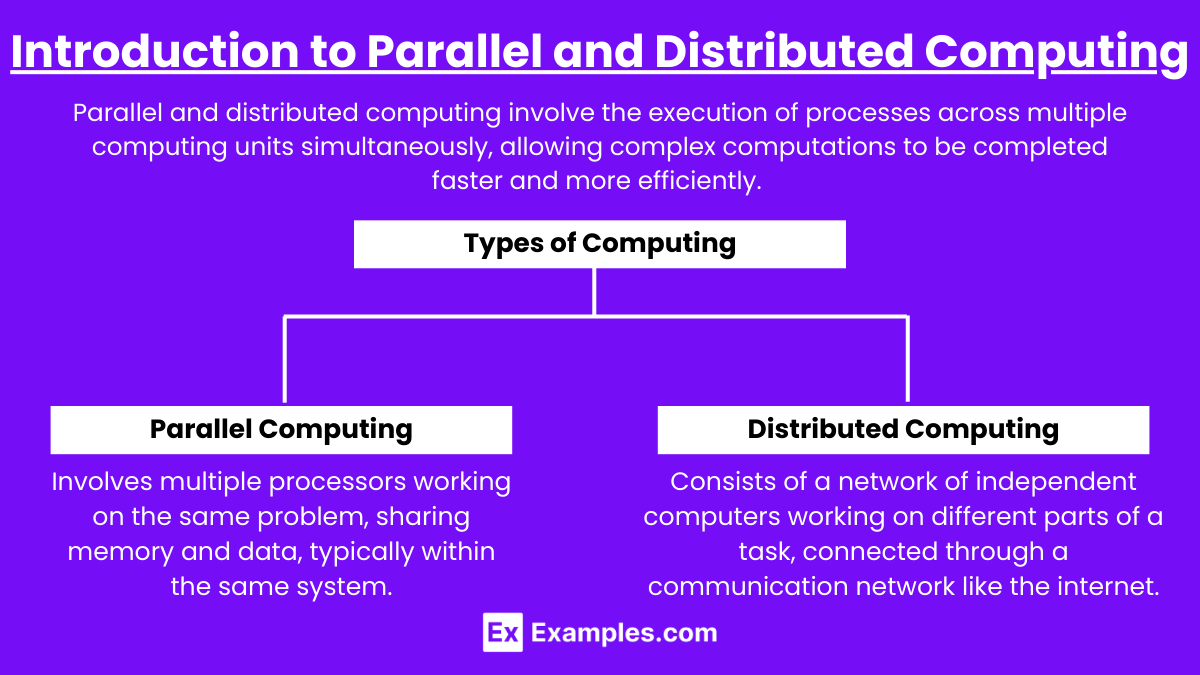

Parallel and distributed computing involve the execution of processes across multiple computing units simultaneously, allowing complex computations to be completed faster and more efficiently. The key difference between the two lies in the architecture:

- Parallel Computing: Involves multiple processors working on the same problem, sharing memory and data, typically within the same system.

- Distributed Computing: Consists of a network of independent computers working on different parts of a task, connected through a communication network like the internet.

Parallel Computing

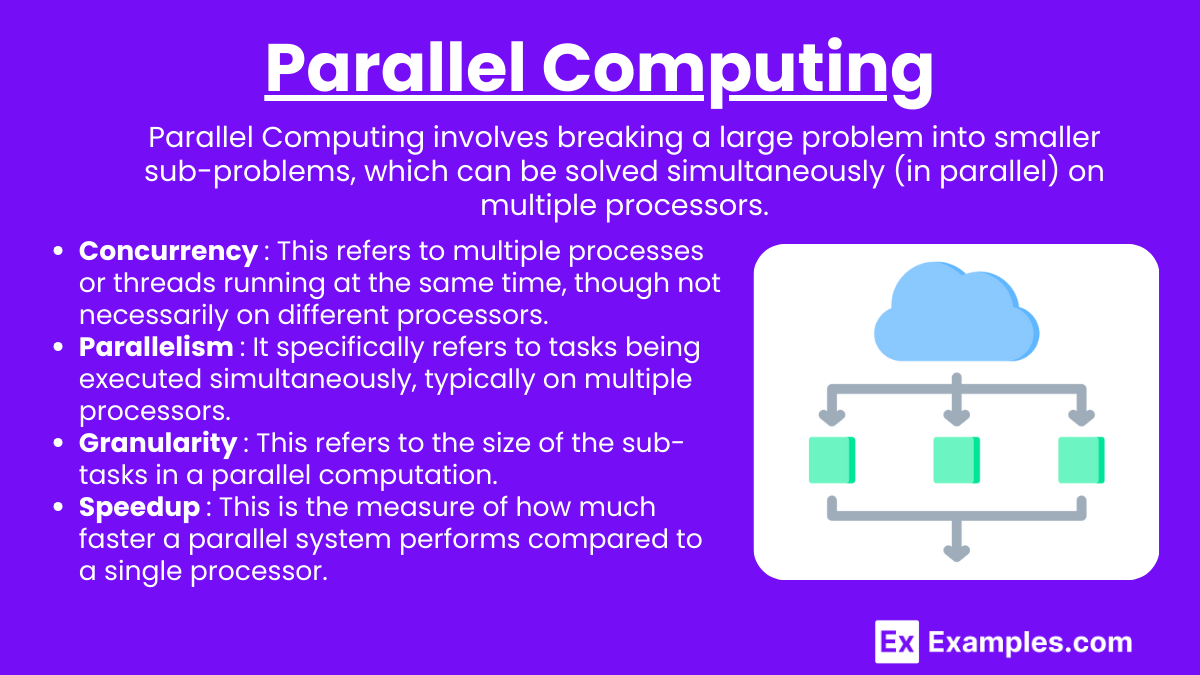

Parallel Computing involves breaking a large problem into smaller sub-problems, which can be solved simultaneously (in parallel) on multiple processors. This approach can significantly speed up computation, especially for tasks that can be broken into independent parts. The main idea is that multiple tasks are executed at the same time using different processors or cores.

Key concepts:

- Concurrency: This refers to multiple processes or threads running at the same time, though not necessarily on different processors. Concurrency focuses on the correct sequencing and coordination of tasks.

- Parallelism: It specifically refers to tasks being executed simultaneously, typically on multiple processors. Parallelism can reduce the time required to solve a problem by dividing the task into smaller sub-tasks, which can be processed simultaneously.

- Granularity: This refers to the size of the sub-tasks in a parallel computation. Fine-grained parallelism involves smaller sub-tasks, while coarse-grained parallelism involves larger sub-tasks. Fine-grained tasks require more frequent communication between processors, while coarse-grained tasks require less communication but can be harder to divide.

- Speedup: This is the measure of how much faster a parallel system performs compared to a single processor. Ideally, the speedup increases linearly with the number of processors, though in practice this is not always the case due to factors like communication overhead.

Distributed Computing

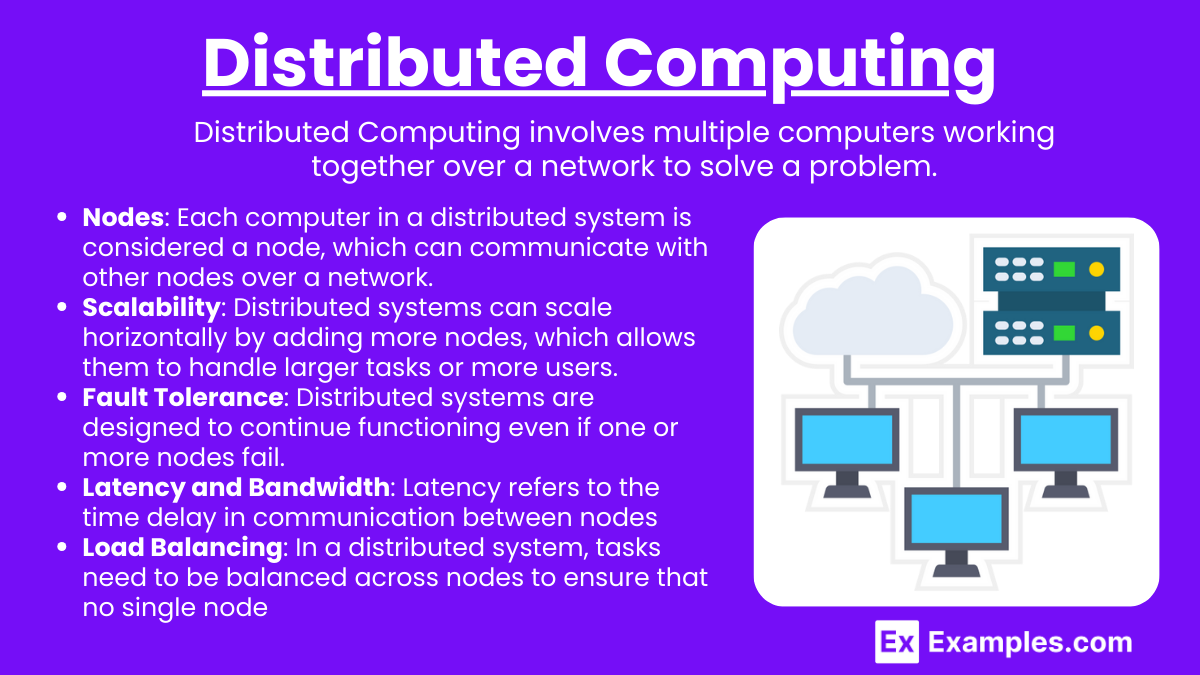

Distributed Computing involves multiple computers working together over a network to solve a problem. Each computer (often called a “node”) works on a portion of the problem, and the results are combined to form the final solution.

Key concepts:

- Nodes: Each computer in a distributed system is considered a node, which can communicate with other nodes over a network. These nodes work independently but communicate to exchange information and combine results.

- Scalability: Distributed systems can scale horizontally by adding more nodes, which allows them to handle larger tasks or more users.

- Fault Tolerance: Distributed systems are designed to continue functioning even if one or more nodes fail. The system can reroute tasks to other nodes to ensure the problem-solving process is not interrupted.

- Latency and Bandwidth: Latency refers to the time delay in communication between nodes, while bandwidth refers to the amount of data that can be transferred in a given amount of time. High latency and low bandwidth can slow down distributed systems.

- Load Balancing: In a distributed system, tasks need to be balanced across nodes to ensure that no single node is overwhelmed while others are idle.

Benefits of Parallel and Distributed Computing

- Speedup: Both parallel and distributed computing systems provide significant reductions in processing time, especially for complex tasks or large datasets.

- Resource Efficiency: Efficiently utilizes computing resources, such as CPU cores, clusters, and networks, minimizing idle time.

- Problem Solving: Enables handling of large-scale computations that a single processor could not perform, such as real-time simulations, massive data analysis, and scientific computing.

Examples

Example 1: Weather Forecasting

Weather simulations involve complex computations that require vast amounts of data to predict climate patterns. In parallel computing, the simulation area is divided into smaller regions, and each region is processed simultaneously by different processors. In distributed computing, weather data from various locations can be collected and processed by multiple computers around the world, combining the results to create accurate weather models.

Example 2: Search Engines

Google and other search engines use distributed computing to handle vast amounts of data generated by billions of web searches. The process of indexing the web is divided among thousands of servers. Parallel computing techniques are used to crawl and analyze multiple web pages simultaneously, while distributed systems ensure that different clusters of servers handle searches efficiently, even if some fail or become overloaded.

Example 3: 3D Animation Rendering

In the entertainment industry, rendering high-quality 3D animations for movies or video games involves rendering millions of individual frames. Parallel computing allows different frames or portions of a scene to be rendered at the same time by multiple processors, significantly reducing the overall time to complete the animation. Distributed systems in rendering farms distribute tasks to several computers across a network, ensuring efficiency and scalability.

Example 4: Cryptocurrency Mining

Mining cryptocurrencies like Bitcoin relies heavily on parallel and distributed computing. Mining involves solving complex mathematical puzzles that require intensive computation. Parallel computing allows miners to try multiple solutions at once, speeding up the process. Distributed computing, with miners across the globe contributing their computing power, helps ensure the security and integrity of the cryptocurrency network.

Example 5: Online Multiplayer Gaming

Games like Fortnite or Call of Duty use distributed computing to support large numbers of players simultaneously. Different servers handle different regions or tasks within the game, such as player matchmaking or world rendering. Parallel computing ensures that computations related to physics, graphics, and player interactions happen concurrently, providing a smooth gaming experience for users across the globe.

Multiple Choice Questions

Question 1

Which of the following is a key difference between parallel and distributed computing?

A. Parallel computing uses multiple computers, while distributed computing uses multiple processors within a single computer.

B. Parallel computing focuses on executing tasks simultaneously within a single system, while distributed computing involves multiple systems working together over a network.

C. Parallel computing cannot handle large tasks, but distributed computing can.

D. Parallel computing is always slower than distributed computing.

Correct Answer: B

Explanation: In parallel computing, multiple processors or cores within a single system work on different parts of a problem simultaneously. In distributed computing, multiple independent systems (or nodes) work together over a network to solve a larger problem. The key distinction is that parallel computing typically involves a single system with multiple processors, while distributed computing involves multiple systems working together.

Question 2

What is the primary advantage of using distributed computing in large-scale applications?

A. It reduces the amount of processing power needed by using a single node.

B. It allows tasks to be executed faster by distributing the workload across multiple independent systems.

C. It eliminates the need for communication between nodes, saving bandwidth.

D. It always results in linear speedup without any delay.

Correct Answer: B

Explanation: Distributed computing enables large-scale applications to be processed faster by distributing the workload across multiple independent systems (nodes). Each node handles a portion of the task, which reduces the overall processing time. However, communication between nodes is still necessary, and the speedup may not always be linear due to factors like network latency and overhead.

Question 3

Which of the following best describes MapReduce in the context of distributed computing?

A. It is a method where one large task is divided into smaller tasks, solved sequentially.

B. It is a technique where tasks are divided into smaller sub-tasks, processed in parallel, and then combined to form a final result.

C. It is an approach where tasks are processed only on a single node to reduce communication overhead.

D. It is a scheduling method for single-core processors.

Correct Answer: B

Explanation: MapReduce is a model used in distributed computing where a task is divided into smaller sub-tasks (the “Map” phase), processed independently across multiple nodes, and then the results are combined in the “Reduce” phase to produce the final output. This approach is highly efficient for processing large data sets across multiple systems.