Understanding probability, thermal equilibrium, and entropy is essential for mastering the principles of thermodynamics and achieving a high score on the AP Physics exam. These topics cover the statistical behavior of particles in a system, how systems reach equilibrium, and the measure of disorder or randomness in a system. Below are detailed notes along with five examples to help you excel in these topics.

Learning Objectives

By studying probability, thermal equilibrium, and entropy for the AP Physics exam, you will learn to calculate the likelihood of various outcomes in physical systems, understand how systems reach a state of balance where temperatures equalize, and grasp the principles governing disorder and energy distribution. Mastering these concepts will enable you to analyze real-world scenarios, solve related mathematical problems, and comprehend the fundamental laws of thermodynamics that dictate the behavior of systems in thermal physics.

Probability

Probability in Thermodynamics: Probability is used to describe the likelihood of finding particles in a particular state or configuration. In thermodynamics, it helps predict the behavior of large numbers of particles.

Key Points:

Microstates: Specific detailed configurations of a system.

Macrostates: Descriptions of a system in terms of macroscopic properties (e.g., temperature, pressure).
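The distinction between microstates and macrostates can be made concrete with a toy model. The sketch below assumes four fair coins as a stand-in for a physical system (the coin model is an illustration, not part of the notes above): each head/tail sequence is a microstate, and the total number of heads is the macrostate.

```python
from math import comb

# Toy model (assumed for illustration): 4 fair coins.
# Microstate = one specific head/tail sequence; macrostate = number of heads.
n_coins = 4
total_microstates = 2 ** n_coins

# Multiplicity: how many microstates belong to each macrostate
multiplicity = {heads: comb(n_coins, heads) for heads in range(n_coins + 1)}

for heads, omega in multiplicity.items():
    probability = omega / total_microstates
    print(f"{heads} heads: {omega} microstates, P = {probability:.4f}")
```

The macrostate with two heads has the most microstates (6 of 16), so it is the most probable. The same counting argument is why large systems are overwhelmingly likely to be found in their highest-multiplicity macrostate.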

Boltzmann Distribution

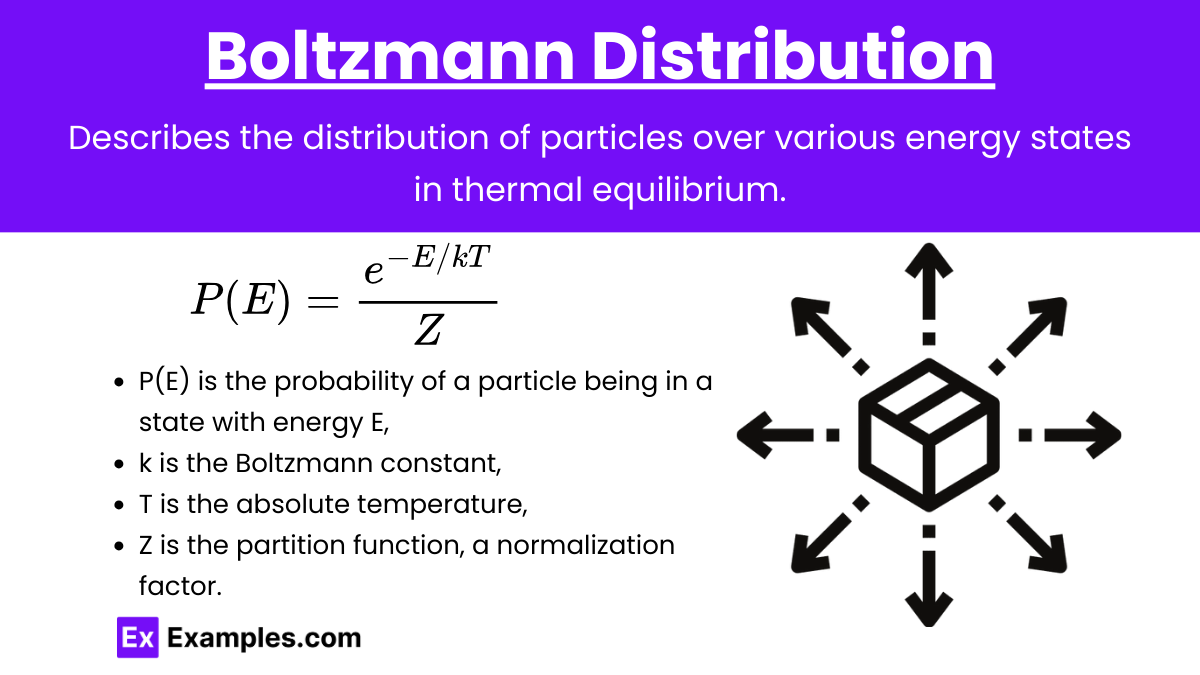

Boltzmann Distribution: Describes the distribution of particles over various energy states in thermal equilibrium.

Formula: P(E) = \frac{e^{-E/kT}}{Z}

where:

P(E) is the probability of a particle being in a state with energy E,

k is the Boltzmann constant,

T is the absolute temperature,

Z is the partition function, a normalization factor.
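As a minimal sketch of how the formula is applied, the snippet below computes P(E) for a hypothetical three-level system with energies 0, kT, and 2kT at 300 K. The energy levels, the temperature, and the function name `boltzmann_probabilities` are assumptions chosen for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K

def boltzmann_probabilities(energies, temperature):
    """Return P(E_i) = exp(-E_i / kT) / Z for each energy (in joules)."""
    weights = [math.exp(-e / (K_B * temperature)) for e in energies]
    z = sum(weights)  # partition function Z: the sum of all Boltzmann factors
    return [w / z for w in weights]

# Hypothetical three-level system at T = 300 K
T = 300.0
levels = [0.0, K_B * T, 2 * K_B * T]
probs = boltzmann_probabilities(levels, T)

for energy, p in zip(levels, probs):
    print(f"E = {energy:.3e} J -> P = {p:.3f}")
```

Dividing by Z guarantees that the probabilities sum to 1, and higher-energy states come out less probable than lower-energy ones.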

Thermal Equilibrium

Thermal Equilibrium: A state in which all parts of a system have the same temperature and no net heat flows between them.

Key Points:

When two systems are in thermal contact, they exchange heat until they reach the same temperature.

At thermal equilibrium, the temperature is uniform throughout the system.

Zeroth Law of Thermodynamics

Zeroth Law of Thermodynamics: If two systems are each in thermal equilibrium with a third system, they are in thermal equilibrium with each other.

Entropy

Entropy (S): A measure of the disorder or randomness in a system. It quantifies the number of possible microstates corresponding to a macrostate.

Key Points:

Higher entropy means greater disorder.

Entropy tends to increase in natural processes (Second Law of Thermodynamics).

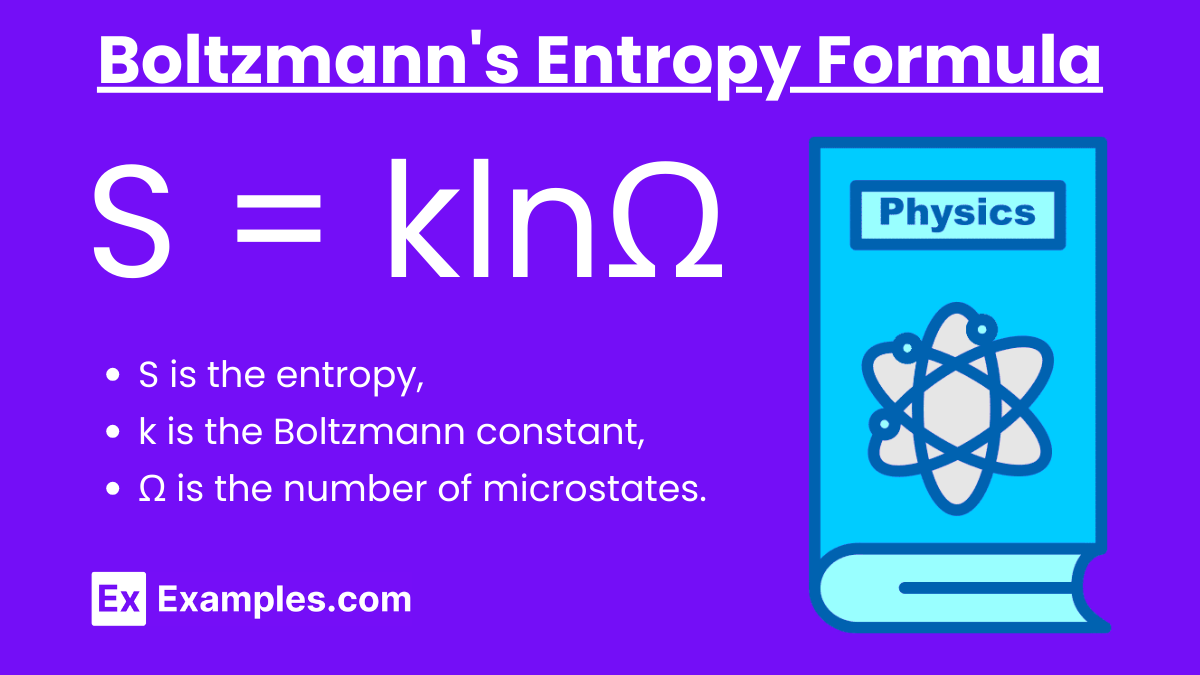

Boltzmann's Entropy Formula

Boltzmann's Entropy Formula: S = k \ln \Omega

where:

S is the entropy,

k is the Boltzmann constant,

Ω is the number of microstates.

Second Law of Thermodynamics

Second Law of Thermodynamics: The total entropy of an isolated system never decreases over time. It increases in irreversible processes, stays constant in reversible ones, and reaches its maximum value at equilibrium.

Examples

Example 1: Probability in Energy States

Scenario: Calculate the probability of a particle being in a state with energy E = 2kT at temperature T.

Solution: Using Boltzmann distribution:

P(E) = \frac{e^{-E/kT}}{Z}

P(2kT) = \frac{e^{-2kT/kT}}{Z} = \frac{e^{-2}}{Z}

The exact probability depends on the partition function Z.
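To see how Z fixes the number, consider a hypothetical two-state system whose states have energies 0 and 2kT. This two-state assumption is made only for illustration and is not part of the scenario. The partition function is the sum of the two Boltzmann factors:

```latex
Z = e^{0} + e^{-2} = 1 + e^{-2} \approx 1.135

P(2kT) = \frac{e^{-2}}{1 + e^{-2}} \approx \frac{0.135}{1.135} \approx 0.119
```

If more states were available, Z would be larger and the probability of this particular state would be smaller.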

Example 2: Reaching Thermal Equilibrium

Scenario: Two blocks of metal, one at 300 K and the other at 500 K, are placed in contact. What will be the final temperature at thermal equilibrium?

Solution: The blocks will exchange heat until they reach the same temperature. The final temperature depends on their masses and specific heat capacities, but conceptually, they will reach a common temperature between 300 K and 500 K.
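A minimal sketch of the calculation, assuming no heat is lost to the surroundings, no phase change occurs, and the specific heats are constant. The masses and the specific heat value (roughly that of copper) are hypothetical, since the scenario does not give them.

```python
def final_temperature(m1, c1, t1, m2, c2, t2):
    """Equilibrium temperature from energy conservation:
    m1*c1*(Tf - t1) + m2*c2*(Tf - t2) = 0, solved for Tf."""
    return (m1 * c1 * t1 + m2 * c2 * t2) / (m1 * c1 + m2 * c2)

C_COPPER = 385.0  # J/(kg*K), approximate value, assumed for illustration

# Two identical 1 kg blocks: the result is the simple average
equal_blocks = final_temperature(1.0, C_COPPER, 300.0, 1.0, C_COPPER, 500.0)

# A 1 kg block at 300 K against a 3 kg block at 500 K: weighted toward 500 K
unequal_blocks = final_temperature(1.0, C_COPPER, 300.0, 3.0, C_COPPER, 500.0)

print(equal_blocks)    # 400.0
print(unequal_blocks)  # 450.0
```

The final temperature is a weighted average of the initial temperatures, with each block weighted by its heat capacity (mass times specific heat). It equals the midpoint only when the two heat capacities match.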

Example 3: Entropy Change in Isothermal Expansion

Scenario: Calculate the change in entropy when 1 mole of an ideal gas expands isothermally and reversibly from volume V₁ to volume V₂.

Solution:

\Delta S = nR \ln \left( \frac{V_2}{V_1} \right)

For 1 mole:

\Delta S = R \ln \left( \frac{V_2}{V_1} \right)
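To put a number on this, the sketch below evaluates the formula for the case where the volume doubles. The doubling and the function name `isothermal_entropy_change` are assumptions for illustration, since the scenario leaves V₁ and V₂ symbolic.

```python
import math

R = 8.314  # ideal gas constant in J/(mol*K)

def isothermal_entropy_change(n_moles, v_initial, v_final):
    """Entropy change of an ideal gas in a reversible isothermal volume change."""
    return n_moles * R * math.log(v_final / v_initial)

# Hypothetical case: 1 mole, volume doubles (only the ratio V2/V1 matters)
delta_s = isothermal_entropy_change(1.0, 1.0, 2.0)
print(f"Delta S = {delta_s:.2f} J/K")  # Delta S = 5.76 J/K
```

Only the ratio V₂/V₁ enters, so any consistent volume unit works. A compression (V₂ < V₁) gives a negative ΔS for the gas.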

Example 4: Entropy Increase

Scenario: Explain the entropy change when ice melts into water at 0°C.

Solution: When ice melts, the structured arrangement of water molecules in ice changes to a more disordered state in liquid water. Therefore, entropy increases.
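The size of the increase can be estimated as well. Because melting takes place at a constant temperature, the entropy change is the heat absorbed divided by the absolute temperature. The estimate below assumes 1 kg of ice (a hypothetical amount) and a latent heat of fusion for water of about 3.34 × 10⁵ J/kg, with T = 273 K:

```latex
\Delta S = \frac{Q}{T} = \frac{m L_f}{T}
         = \frac{(1\,\text{kg})(3.34 \times 10^{5}\,\text{J/kg})}{273\,\text{K}}
         \approx 1.22 \times 10^{3}\,\text{J/K}
```

The positive sign agrees with the qualitative argument: the water gains entropy as it absorbs heat and becomes more disordered.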

Example 5: Entropy and Probability

Scenario: A system has four microstates with equal probability. Calculate the entropy.

Solution: Number of microstates (Ω) = 4

S = k \ln \Omega = k \ln 4

If k \approx 1.38 \times 10^{-23} \, \text{J/K}:

S \approx 1.38 \times 10^{-23} \ln 4 \approx 1.91 \times 10^{-23} \, \text{J/K}
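The same arithmetic can be checked with a short sketch. The function name `boltzmann_entropy` is a hypothetical helper, and the two-system comparison is an added illustration rather than part of the example.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K

def boltzmann_entropy(num_microstates):
    """Entropy S = k ln(Omega) for equally probable microstates."""
    return K_B * math.log(num_microstates)

s_single = boltzmann_entropy(4)
print(f"S = {s_single:.2e} J/K")  # S = 1.91e-23 J/K

# Two independent four-state systems have 4 * 4 = 16 joint microstates
s_pair = boltzmann_entropy(16)
print(math.isclose(s_pair, 2 * s_single))  # True
```

The logarithm turns the product of microstate counts into a sum, which is why the entropies of independent systems add.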

Practice Problems

Question 1: Probability

A box contains 3 red balls, 4 blue balls, and 5 green balls. If one ball is drawn at random, what is the probability that the ball is either red or green?

A) \frac{1}{2}

B) \frac{2}{3}

C) \frac{3}{5}

D) \frac{4}{5}

Answer: B) \frac{2}{3}

Explanation:

To find the probability of drawing either a red or green ball, we first determine the total number of balls:

Total balls = 3 (red) + 4 (blue) + 5 (green) = 12

Next, we find the total number of favorable outcomes (red or green balls):

Red balls = 3

Green balls = 5

Favorable outcomes = 3 + 5 = 8

The probability is given by the ratio of favorable outcomes to the total number of outcomes:

P(\text{Red or Green}) = \frac{8}{12} = \frac{2}{3}

Thus, the correct answer is \frac{2}{3}.
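The counting argument can be verified with exact fractions. This is a minimal sketch; the dictionary name `counts` is an illustrative choice.

```python
from fractions import Fraction

# Ball counts from the question
counts = {"red": 3, "blue": 4, "green": 5}

total = sum(counts.values())
favorable = counts["red"] + counts["green"]

# Fraction reduces 8/12 to lowest terms automatically
probability = Fraction(favorable, total)
print(probability)  # 2/3
```

Because a ball cannot be both red and green, the two outcomes are mutually exclusive and their counts simply add.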

Question 2: Thermal Equilibrium

Two objects, A and B, are in thermal contact. Object A has a temperature of 400 K, and object B has a temperature of 300 K. Which of the following statements is true when they reach thermal equilibrium?

A) The temperature of both objects will be 400 K

B) The temperature of both objects will be 300 K

C) The temperature of both objects will be 350 K

D) The temperature of both objects will be the same but not necessarily 350 K

Answer: D) The temperature of both objects will be the same but not necessarily 350 K

Explanation:

When two objects are in thermal contact, heat will flow from the hotter object to the cooler one until they reach thermal equilibrium, at which point they will have the same temperature. However, the final temperature depends on the specific heat capacities and masses of the objects.

Since we are not given the specific heat capacities or masses, we cannot determine the exact final temperature. We only know that the temperatures will be equal.

Thus, the correct answer is that the temperature of both objects will be the same but not necessarily 350 K.

Question 3: Entropy

Which of the following statements correctly describes the concept of entropy in a thermodynamic system?

A) Entropy measures the total energy in a system.

B) Entropy always decreases in an isolated system.

C) Entropy measures the disorder or randomness of a system.

D) Entropy remains constant during any reversible process.

Answer: C) Entropy measures the disorder or randomness of a system

Explanation:

Entropy is a measure of the disorder or randomness of a system. In thermodynamics, it is a state function related to how much of a system's energy is unavailable to do work. The second law of thermodynamics states that the entropy of an isolated system never decreases: it increases in an irreversible process and remains constant in a reversible one. Option D is incorrect because the entropy of a system that is not isolated can change even in a reversible process, as in a reversible isothermal expansion.

Thus, the correct answer is that entropy measures the disorder or randomness of a system.