Basics of Multiple Regression and Underlying Assumptions


For CFA Level 2 candidates, mastering the basics of multiple regression and its underlying assumptions is vital. Understanding these concepts allows for robust analysis of financial data and decision-making based on statistical inference, enhancing skills in predictive modeling and valuation crucial for the Quantitative Methods section of the exam.

Learning Objectives

In studying the “Basics of Multiple Regression and Underlying Assumptions” for the CFA exam, you should aim to thoroughly comprehend the formulation and application of multiple regression in financial contexts, understand the fundamental assumptions that ensure its validity, and become adept at identifying, diagnosing, and remedying any violations of these assumptions. This comprehensive understanding will enable you to interpret regression results accurately and apply these insights effectively to real-world financial data. Additionally, you will evaluate how violations of these assumptions can influence the conclusions of a regression analysis and learn how to implement corrective measures to enhance the reliability of your models.

Introduction to Multiple Regression

- Definition and Use: Multiple regression is a statistical technique that explains the relationship between one dependent variable and two or more independent variables.

- Application in Finance: Used for predicting financial outcomes, evaluating investment opportunities, and assessing risk factors.
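As a sketch of the definition above, a multiple regression with $k$ independent variables is conventionally written as follows. The symbols ($Y_i$, $X_{ji}$, $b_j$, $\varepsilon_i$) are notation assumed here for illustration and do not come from these notes:

$$
Y_i = b_0 + b_1 X_{1i} + b_2 X_{2i} + \dots + b_k X_{ki} + \varepsilon_i, \qquad i = 1, \dots, n
$$

Here $b_0$ is the intercept, each slope $b_j$ measures the expected change in $Y$ for a one-unit change in $X_j$ with the other independent variables held constant, and $\varepsilon_i$ is the error term.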

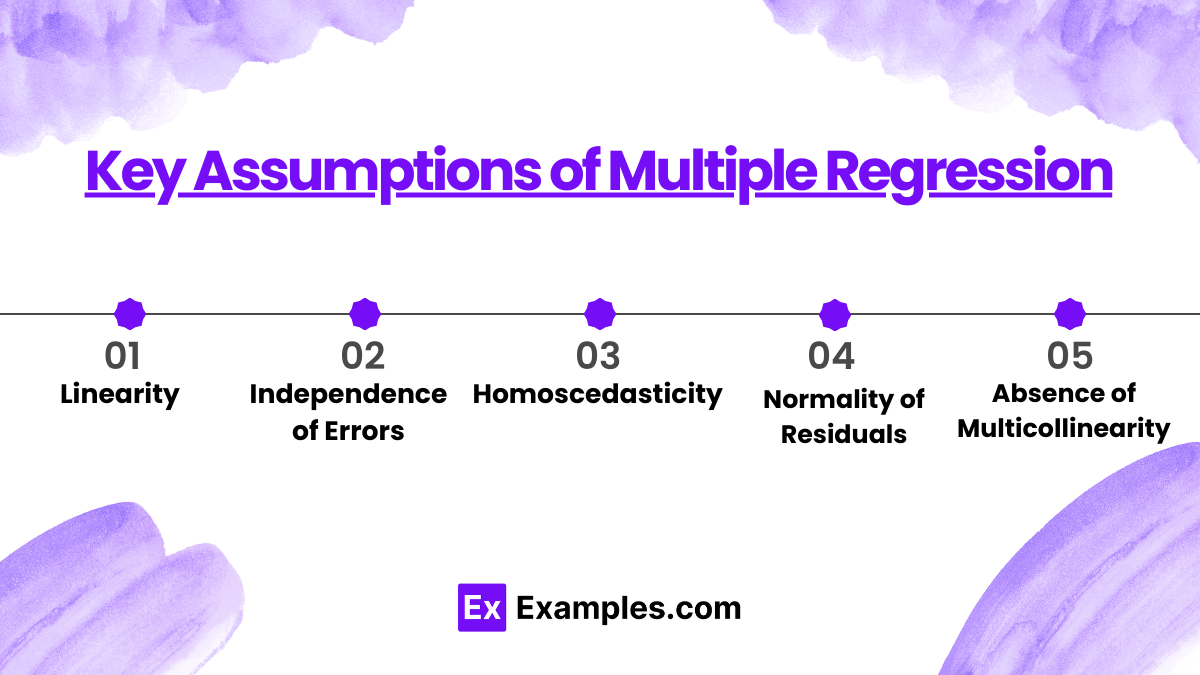

Key Assumptions of Multiple Regression

- Linearity: The relationship between dependent and independent variables should be linear.

- Independence of Errors: The error terms should be uncorrelated across observations (no serial correlation).

- Homoscedasticity: Constant variance of residuals across all levels of independent variables.

- Normality of Residuals: Residuals should be normally distributed.

- Absence of Multicollinearity: Independent variables should not be highly correlated with one another, and none should be an exact linear combination of the others.

Testing and Diagnosing Assumptions

- Residual Plots: Used to detect non-linearity, heteroscedasticity, and outliers.

- Durbin-Watson Statistic: Tests for first-order serial correlation (autocorrelation) in the residuals, which would violate the independence of errors.

- VIF (Variance Inflation Factor): Assesses the presence of multicollinearity.
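The two numerical diagnostics in this list can be computed by hand. The following is a minimal sketch using NumPy on synthetic data. The helper names (`ols_fit`, `durbin_watson`, `vif`) and the simulated variables are hypothetical and chosen only for illustration; in practice a statistics package would typically supply these diagnostics.

```python
import numpy as np

def ols_fit(X, y):
    """Regress y on X plus an intercept; return (coefficients, residuals)."""
    X1 = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return beta, y - X1 @ beta

def durbin_watson(resid):
    """Sum of squared successive residual differences over sum of squared residuals."""
    resid = np.asarray(resid, dtype=float)
    return float(np.sum(np.diff(resid) ** 2) / np.sum(resid ** 2))

def vif(X):
    """VIF for each column: 1 / (1 - R^2) from regressing it on the other columns."""
    X = np.asarray(X, dtype=float)
    factors = []
    for j in range(X.shape[1]):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        _, resid = ols_fit(others, target)
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        factors.append(1.0 / (1.0 - r2))
    return factors

# Synthetic data (assumption): x2 is built to be nearly collinear with x1.
rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = 0.9 * x1 + 0.1 * rng.normal(size=n)
x3 = rng.normal(size=n)
X = np.column_stack([x1, x2, x3])
y = 1.0 + 2.0 * x1 - 1.0 * x3 + rng.normal(size=n)

beta, resid = ols_fit(X, y)
dw = durbin_watson(resid)
vifs = vif(X)
print("Durbin-Watson:", round(dw, 2))
print("VIFs:", [round(v, 1) for v in vifs])
```

Because the simulated errors are independent, the Durbin-Watson value should land near 2, while the VIFs for `x1` and `x2` should be large and the VIF for `x3` close to 1.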

Interpreting Regression Outputs

- Coefficients and P-Values: Understand how to interpret the size and significance of regression coefficients.

- R-Squared and Adjusted R-Squared: Evaluate the goodness of fit of the regression model.
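To make the difference between the two fit measures concrete, here is a small pure-Python sketch. The observed and fitted values are made-up numbers, and the function names are hypothetical. It uses the standard definitions: R-squared is one minus the ratio of the residual sum of squares to the total sum of squares, and adjusted R-squared penalizes that value for the number of independent variables `k`.

```python
def r_squared(y, y_hat):
    """1 - SSE/SST, where SSE is the residual and SST the total sum of squares."""
    mean_y = sum(y) / len(y)
    sse = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    sst = sum((a - mean_y) ** 2 for a in y)
    return 1.0 - sse / sst

def adjusted_r_squared(r2, n, k):
    """Adjust R-squared for n observations and k independent variables."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)

# Made-up observed values and fitted values from some regression (assumption).
y = [2.0, 4.0, 5.0, 4.0, 5.0]
y_hat = [2.8, 3.4, 4.0, 4.6, 5.2]

r2 = r_squared(y, y_hat)
adj_k1 = adjusted_r_squared(r2, n=len(y), k=1)
adj_k2 = adjusted_r_squared(r2, n=len(y), k=2)
print(r2, adj_k1, adj_k2)
```

With these numbers R-squared is about 0.60. Holding it fixed, adjusted R-squared is about 0.47 with one independent variable and about 0.20 with two, which shows why adding a variable that does not improve the fit lowers adjusted R-squared.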

Dealing with Violations

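- Transformation of Variables: Transformations such as logarithms can address non-linearity and non-normality, and often reduce heteroscedasticity as well.

- Adding Interaction Terms: Capture cases where the effect of one independent variable depends on the level of another, which a purely additive model would misspecify.

- Ridge or Lasso Regression: Regularization techniques that stabilize coefficient estimates when independent variables are highly correlated. They mitigate the effects of multicollinearity rather than removing the correlation itself.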
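As an illustration of the last remedy, the sketch below applies the closed-form ridge estimator to two nearly collinear predictors. The data are synthetic, the function name `ridge_coefficients` is hypothetical, and the penalty value `lam=5.0` is an arbitrary choice for illustration rather than a recommended setting.

```python
import numpy as np

def ridge_coefficients(X, y, lam):
    """Closed-form ridge slopes on centered data; lam = 0 gives the OLS slopes."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    k = Xc.shape[1]
    return np.linalg.solve(Xc.T @ Xc + lam * np.eye(k), Xc.T @ yc)

# Synthetic data (assumption): x2 is almost a copy of x1.
rng = np.random.default_rng(1)
n = 100
x1 = rng.normal(size=n)
x2 = x1 + 0.05 * rng.normal(size=n)
X = np.column_stack([x1, x2])
y = 3.0 * x1 + rng.normal(size=n)

b_ols = ridge_coefficients(X, y, lam=0.0)
b_ridge = ridge_coefficients(X, y, lam=5.0)
print("OLS slopes:  ", b_ols)
print("Ridge slopes:", b_ridge)
```

The ridge slopes always have a smaller overall magnitude than the OLS slopes, which trades a little bias for lower variance. The correlation between `x1` and `x2` is unchanged; only its effect on the estimates is dampened.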

Examples

Example 1: Predicting Stock Returns Using Multiple Regression

Multiple regression analysis can be employed to predict stock returns by incorporating variables like market capitalization, price-to-earnings (P/E) ratio, and sector performance. This approach allows analysts to quantify the impact of various financial indicators and market conditions on stock prices, providing a more nuanced understanding of investment opportunities.

Example 2: Credit Risk Modeling with Multiple Regression

Credit risk models utilize multiple regression to assess the likelihood of default by analyzing borrower-specific data such as income, credit history, and loan characteristics. This method helps financial institutions determine risk levels associated with lending, enabling them to make informed credit decisions and manage loan portfolios effectively.

Example 3: Real Estate Pricing Analysis

Multiple regression is vital for modeling real estate prices by evaluating factors like location, size, age, and property condition. This statistical technique helps real estate analysts predict property values based on multiple input variables, offering a comprehensive tool for investment analysis and pricing strategy development.

Example 4: Portfolio Performance Analysis

By applying multiple regression, financial analysts can explore how different asset allocations influence portfolio performance and risk. This analysis provides insights into the relative contributions of various assets to the portfolio’s returns and volatility, assisting portfolio managers in optimizing investment strategies.

Example 5: Economic Forecasting Using Multiple Regression

Multiple regression models are crucial for forecasting economic indicators such as GDP growth, inflation rates, and unemployment by analyzing a range of macroeconomic variables. This predictive capability is essential for government agencies and financial institutions in planning and policy-making processes, helping anticipate economic trends and adjust strategies accordingly.

Practice Questions

Question 1

What does the Durbin-Watson statistic test in a regression model?

A) Linearity of the relationship

B) Independence of the residuals

C) Homoscedasticity of residuals

D) Normality of residuals

Answer: B) Independence of the residuals

Explanation: The Durbin-Watson statistic tests for first-order autocorrelation in the residuals of a regression model. It ranges from 0 to 4. A value close to 2.0 suggests no autocorrelation, values well below 2.0 (toward 0) indicate positive autocorrelation, and values well above 2.0 (toward 4) indicate negative autocorrelation.
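For reference, the statistic discussed in this explanation is usually defined as shown below. The notation is assumed here for illustration: $e_t$ is the residual for observation $t$, and $r$ is the sample first-order autocorrelation of the residuals.

$$
DW = \frac{\sum_{t=2}^{n} (e_t - e_{t-1})^2}{\sum_{t=1}^{n} e_t^2} \approx 2(1 - r)
$$

The approximation holds for large samples and explains the benchmark values: $r = 0$ gives $DW \approx 2$, $r = 1$ gives $DW \approx 0$, and $r = -1$ gives $DW \approx 4$.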

Question 2

Which issue is directly addressed by checking the Variance Inflation Factor (VIF) in a regression analysis?

A) Residual outliers

B) Multicollinearity among predictors

C) Heteroscedasticity

D) Model overfitting

Answer: B) Multicollinearity among predictors

Explanation: VIF quantifies how strongly each independent variable is linearly related to the other independent variables in the model. High VIF values indicate high multicollinearity, which inflates the variances (and standard errors) of the coefficient estimates and makes individual coefficients and their t-tests unreliable.
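The standard definition makes this concrete. In the notation assumed here, $R_j^2$ is the R-squared from regressing independent variable $X_j$ on all the other independent variables:

$$
VIF_j = \frac{1}{1 - R_j^2}
$$

When $X_j$ is unrelated to the other predictors, $R_j^2 = 0$ and $VIF_j = 1$. As $R_j^2$ approaches 1, the VIF grows without bound. A common rule of thumb, not a strict test, treats values above 5 as worth investigating and values above 10 as a sign of serious multicollinearity.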

Question 3

Which assumption of multiple regression is violated if the spread of the residuals increases as the values of the predictors increase?

A) Independence of errors

B) Linearity

C) Homoscedasticity

D) Normality

Answer: C) Homoscedasticity

Explanation: Homoscedasticity assumes that the variance of the residuals is constant across all levels of the independent variables. If the spread of the residuals widens as the predictors increase, the variance is not constant. This is heteroscedasticity, which violates the assumption.